The Dawn of Agentic Marketing

How AI is changing the landscape of B2B marketing

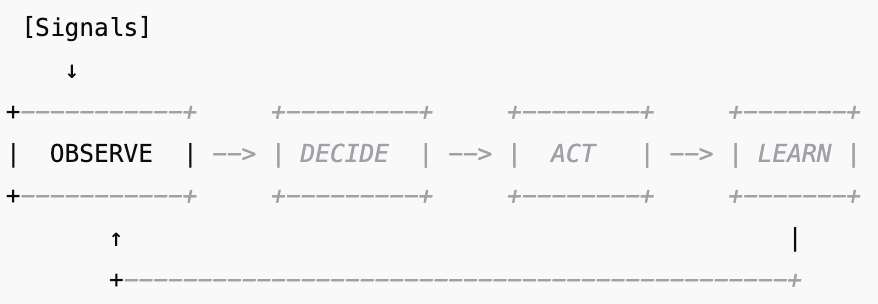

Dashboards don’t move pipeline — agents do. This is about turning the signals you already have into workflows that observe → decide → act → learn, without waiting on weekly meetings or manual hand-offs.

TL;DR

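Agentic marketing wires one reliable signal to one high-leverage action, measures the outcome, and uses it to improve the next decision. Start small (one agent), give it guardrails, log every run, and promote the wins that matter: time-to-action, meeting rate, ops debt retired.

What “agentic” really means (in marketing terms)

An agent is a workflow that observes a signal, decides whether it warrants action, acts through the tools you already own, and learns from the logged outcome of every run.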

Why now (and why this isn’t just “new automation”)

Three shifts make agentic marketing practical today:

Richer signals, everywhere. Product events, web analytics, ad platform hooks, CRM changes, intent feeds—most are streamable via API or webhook.

Decisioning got cheap. You can mix rules, heuristics, and LLMs to handle ambiguity without re-platforming to a giant CDP.

The stack is callable. iPaaS, CRMs, MAPs, data warehouses, ad platforms—everything exposes endpoints. That means your “playbook” can execute itself.

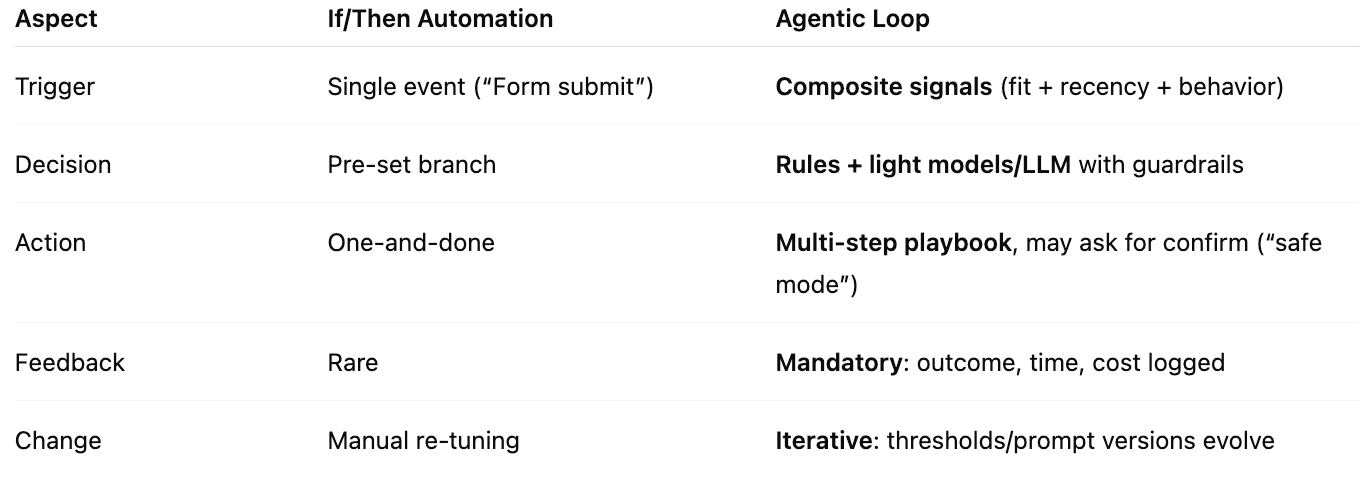

Automation vs. Agentic: what’s the difference?

Classic marketing automation runs a static if/then. Agentic flows close the loop and improve with each run.

The Loop
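As a rough illustration of closing the loop, here is a minimal Python sketch of one agent. The `decide` and `act` hooks are hypothetical callables you would supply (rules, an LLM call, a CRM task); the point is that, unlike a static if/then, every run is appended to a history that the "learn" step reads.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Loop:
    """One agent: observe -> decide -> act -> learn. All hooks are hypothetical."""
    decide: Callable[[dict], str]   # returns "execute", "needs_human" or "deny"
    act: Callable[[dict], bool]     # performs the action, returns success
    history: list = field(default_factory=list)  # learn: every run's outcome

    def run(self, signal: dict) -> dict:
        # observe: the caller hands in the signal (web event, CRM change, ...)
        decision = self.decide(signal)                                  # decide
        success = self.act(signal) if decision == "execute" else None   # act
        record = {"signal": signal, "decision": decision, "success": success}
        self.history.append(record)  # no log, no run
        return record

    def success_rate(self) -> float:
        """Share of executed runs that succeeded (the number you tune against)."""
        acted = [r for r in self.history if r["success"] is not None]
        return sum(r["success"] for r in acted) / len(acted) if acted else 0.0


loop = Loop(decide=lambda s: "execute" if s["icp_fit"] >= 70 else "deny",
            act=lambda s: True)
loop.run({"icp_fit": 82})
loop.run({"icp_fit": 40})
print(loop.success_rate())  # 1.0
```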


Case study (composite, anonymized): Hot Visitor → Fast Lane

Problem: ICP accounts hit the site, but reps respond hours later.

Goal: Cut the time from signal to first touch down to minutes; raise the meeting rate without blasting everyone.

Minimal stack

Website events → Identify account/person (reverse-DNS or 1st-party)

Enrichment API (company + role)

CRM + Slack (or Teams)

Email/Sales engagement tool

Lightweight store (table) for logs

Decision rules (v1)

ICP fit ≥ 70

Last known activity ≤ 30 days OR intent score ≥ threshold

Page type ∈ {pricing, product, case study} with ≥ 2 pageviews in 15 minutes
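The v1 rules translate directly into a pure function. This is a sketch, not the case study's actual code: field names are borrowed from the decision prompt in the copy-paste kit, and the intent threshold of 65 is an assumption taken from the kit's thresholds file. Missing inputs return `needs_human` instead of guessing.

```python
ALLOWED_PAGES = {"pricing", "product", "case-study"}
REQUIRED = ("icp_fit", "recent_pages", "pageviews_15min")


def decide_v1(visit: dict, intent_min: int = 65) -> tuple[str, list[str]]:
    """Return (decision, fields_needed) for the Hot Visitor play (sketch)."""
    missing = [f for f in REQUIRED if visit.get(f) is None]
    recency = visit.get("last_activity_days")
    intent = visit.get("intent_score")
    # The OR rule needs at least one side; if both are unknown, abstain.
    if recency is None and intent is None:
        missing.append("last_activity_days|intent_score")
    if missing:
        return "needs_human", missing
    fit_ok = visit["icp_fit"] >= 70
    # A missing side of the OR simply counts as "not satisfied".
    engaged = (recency is not None and recency <= 30) or (
        intent is not None and intent >= intent_min)
    pages_ok = bool(ALLOWED_PAGES & set(visit["recent_pages"])) and visit["pageviews_15min"] >= 2
    return ("execute" if fit_ok and engaged and pages_ok else "deny"), []


print(decide_v1({"icp_fit": 82, "intent_score": 71,
                 "recent_pages": ["pricing", "blog"], "pageviews_15min": 3}))
# ('execute', [])
```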

Action

Enrich → attach context (pages, persona, firmographics)

Post to #fast-lane with a 1-click button: “Send intro email”

If clicked, create CRM task + draft email with tailored opening line

Log run: decision, responder, time-to-touch, reply status

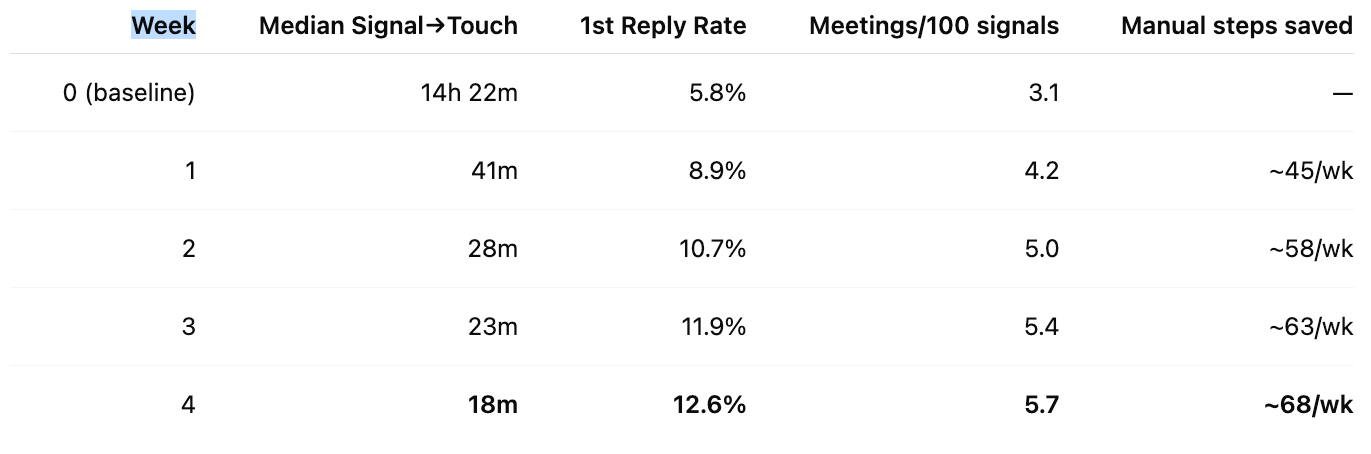

Results after 4 weeks (sample numbers)

What changed?

Tightened page-set; added “repeat visit within 7 days” condition.

Killed noisy vendors; boosted visitors with known champions.

Introduced safe mode at launch (human confirm) → full auto for top-decile fit.
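One way to implement "full auto for top-decile fit" is to recompute the 90th-percentile ICP fit over recently logged runs and route anything below it to human confirmation. A sketch, assuming you keep a list of recent fit scores; the mode names `auto` and `safe_mode` are hypothetical.

```python
import statistics


def top_decile_cutoff(recent_fit_scores: list[float]) -> float:
    """90th-percentile ICP fit over recent runs (needs at least 2 scores)."""
    return statistics.quantiles(recent_fit_scores, n=10)[-1]


def route(decision: str, icp_fit: float, cutoff: float) -> str:
    """Map an 'execute' decision to a rollout mode (hypothetical mode names)."""
    if decision != "execute":
        return decision  # deny / needs_human pass through unchanged
    return "auto" if icp_fit >= cutoff else "safe_mode"  # safe_mode = human confirms


cutoff = top_decile_cutoff([72, 75, 78, 80, 81, 83, 85, 88, 91, 96])
print(route("execute", 97, cutoff), route("execute", 80, cutoff))  # auto safe_mode
```

Recomputing the cutoff from logged scores, rather than hard-coding it, keeps "top decile" meaningful as the traffic mix shifts.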

Ship your first agent in 7 days

Day 1 — Pick one signal + one action.

Example: “ICP account views pricing twice in 15 minutes” → “Enrich + alert channel + 1-click email to owning AE.”

Define success: “reply within 48h” or “meeting created.”

Day 2 — Spec the contract.

Required fields, thresholds, failure messages, rollback switch.

Add a lightweight run log table (schema below).

Day 3 — Shadow mode.

Make decisions and draft actions, but do not execute.

Review 20–50 runs for false positives.

Day 4–5 — Safe mode.

Execute only when a human clicks “approve.”

Capture approval latency; refine thresholds.

Day 6 — Full auto (+ guardrails).

Auto-execute on top-decile fits; leave safe mode elsewhere.

Rate-limit actions per account/person/day.
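A per-account/per-person daily limit can be as simple as a rolling 24-hour window per entity key. This in-memory sketch assumes timestamps arrive in non-decreasing order and that a `max_per_day` cap is your choice; in production the counts would live in the run log or a shared store. All keys are checked before any slot is consumed, so a blocked person doesn't burn the account's quota.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


class DailyRateLimiter:
    """At most `max_per_day` actions per entity key in any rolling 24h window."""

    def __init__(self, max_per_day: int = 1):
        self.max_per_day = max_per_day
        self.events: dict[str, deque] = defaultdict(deque)

    def allow(self, keys: list[str], now: datetime) -> bool:
        # Expire actions older than 24 hours for every key involved.
        for key in keys:
            window = self.events[key]
            while window and now - window[0] >= timedelta(hours=24):
                window.popleft()
        # Refuse if ANY key (account or person) is already at its cap.
        if any(len(self.events[k]) >= self.max_per_day for k in keys):
            return False
        for key in keys:
            self.events[key].append(now)  # record the action about to be taken
        return True


limiter = DailyRateLimiter(max_per_day=1)
t0 = datetime(2024, 5, 1, 9, 0)
print(limiter.allow(["account:acme", "person:p1"], t0))                        # True
print(limiter.allow(["account:acme", "person:p2"], t0 + timedelta(hours=2)))   # False
print(limiter.allow(["account:acme", "person:p1"], t0 + timedelta(hours=25)))  # True
```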

Day 7 — Review + publish the receipt.

Share early metrics (time-to-action, approvals, replies).

Write a 1-pager (before/after, screenshots, who benefited).

What to measure (so you know it’s working)

Time to action (TTA): first_touch_at - signal_at

Action success rate: successful_actions / total_actions (define “success”)

Stage movement: % of touched deals that advance within X days

Ops debt retired: manual touches removed/week; tools disabled; steps deleted

Cost to serve: compute + enrichment + send costs per successful outcome

If a number doesn’t move, you don’t have an agent—you have a cron job. Kill or redesign it.
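Three of these numbers fall straight out of the run log. A sketch, assuming rows shaped like the run-log schema in the copy-paste kit and using `action_ts` as the first-touch time (as the kit's KPI SQL does). Cost to serve sums every run's cost, including denied runs, because enrichment and compute are spent either way.

```python
from datetime import datetime
from statistics import median


def kpis(runs: list[dict]) -> dict:
    """Median TTA, action success rate and cost per success from run-log rows."""
    acted = [r for r in runs if r.get("action_ts") is not None]
    tta_minutes = [(r["action_ts"] - r["signal_ts"]).total_seconds() / 60 for r in acted]
    successes = sum(1 for r in acted if r.get("success"))
    total_cost = sum(r.get("cost_estimate_usd") or 0.0 for r in runs)
    return {
        "median_tta_min": median(tta_minutes) if tta_minutes else None,
        "success_rate": successes / len(acted) if acted else None,
        "cost_per_success_usd": total_cost / successes if successes else None,
    }


t = datetime(2024, 5, 1, 9, 0)
runs = [
    {"signal_ts": t, "action_ts": datetime(2024, 5, 1, 9, 4), "success": True, "cost_estimate_usd": 0.12},
    {"signal_ts": t, "action_ts": datetime(2024, 5, 1, 9, 10), "success": False, "cost_estimate_usd": 0.12},
    {"signal_ts": t, "action_ts": None, "success": None, "cost_estimate_usd": 0.02},  # denied run
]
print(kpis(runs))
```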

Failure modes (and how to avoid them)

Zombie automation: No outcome logging.

→ Rule: no log, no run. Every action writes back success/fail + reason.

Prompt sprawl: Inconsistent decisions.

→ Fix: templatize prompts; version them; keep test sets.

PII creep: Too much data sloshing around.

→ Fix: minimize payloads; redact; encrypt; expire logs.

Over-triggering: You blanket the same account.

→ Fix: per-entity rate limits and cool-downs.

Ambiguity: The agent doesn’t know when to abstain.

→ Fix: a “return needs_human if uncertain” policy + safe-mode fallback.
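For PII creep, the cheapest guardrail is an allowlist applied before any payload leaves the pipeline, plus masking of stray email addresses in what remains. A sketch covering only "minimize" and "redact" (not encryption or log expiry); the `ALLOWED_FIELDS` set is a hypothetical example you would tailor to your play.

```python
import re

# Hypothetical allowlist: only the fields the decision actually needs.
ALLOWED_FIELDS = {"account_id", "icp_fit", "intent_score", "recent_pages", "pageviews_15min"}
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(\.[\w-]+)+")


def minimize(payload: dict) -> dict:
    """Drop non-allowlisted fields; mask email addresses in remaining strings."""
    out = {}
    for key, value in payload.items():
        if key not in ALLOWED_FIELDS:
            continue  # never forwarded, never logged
        if isinstance(value, str):
            value = EMAIL_RE.sub("[redacted-email]", value)
        out[key] = value
    return out


print(minimize({"account_id": "acme", "email": "jo@acme.com", "icp_fit": 82}))
# {'account_id': 'acme', 'icp_fit': 82}
```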

Packaging wins for career impact

You’re not just building flows; you’re building receipts.

Before/after screenshot (time-to-action chart, reply rates).

1-pager: problem → agent → metrics → quote from a beneficiary (AE/CSM).

Short loom: “Here’s what the system does; here’s the log of outcomes.”

Ask with outcomes: “Fund X hours or Y tool so I can copy this agent to Z use cases.”

Copy-paste kit

1) Decision prompt (LLM)

```text
You are a cautious GTM Ops decisioner. Decide whether to EXECUTE the play
"Enrich → Slack alert → 1-click email to AE" for a web visitor.

Execute only if ALL are true:
- ICP_fit >= 70 (0-100)
- last_activity_days <= 30 OR intent_score >= 65
- recent_pages contains any of ["pricing","product","case-study"] AND pageviews_15min >= 2

If uncertain, return decision="needs_human" with fields_needed.

Output STRICT JSON:
{"decision":"execute|needs_human|deny","reason":"<short>","risk":"low|med|high","fields_needed":["<field>"]}
```
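"STRICT JSON" only helps if you enforce it. A sketch of validating the model's reply before acting: anything that doesn't parse or uses an unknown enum value degrades to `needs_human`, so a chatty or malformed answer can never trigger an action. The fallback's `risk: "high"` is an assumption.

```python
import json

ALLOWED = {"decision": {"execute", "needs_human", "deny"}, "risk": {"low", "med", "high"}}
FALLBACK = {"decision": "needs_human", "reason": "unparseable model output", "risk": "high"}


def parse_decision(raw: str) -> dict:
    """Validate the model's reply; malformed output becomes needs_human."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return dict(FALLBACK)
    if not isinstance(data, dict) or not isinstance(data.get("reason"), str):
        return dict(FALLBACK)
    for key, allowed in ALLOWED.items():
        value = data.get(key)
        if not isinstance(value, str) or value not in allowed:
            return dict(FALLBACK)
    return data


print(parse_decision('{"decision":"execute","reason":"fit 82, pricing x3","risk":"low"}')["decision"])  # execute
print(parse_decision("Sure! I think we should execute.")["decision"])  # needs_human
```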

2) Rules/thresholds (JSON you can keep in a file/record)

```json
{
  "play_name": "Hot Visitor → Fast Lane v1.2",
  "thresholds": {
    "icp_fit_min": 70,
    "intent_min": 65,
    "pageviews_window_min": 2,
    "cooldown_hours_account": 24
  },
  "allow_pages": ["pricing", "product", "case-study"],
  "deny_reasons": {
    "low_fit": "ICP fit below threshold",
    "cooldown": "Recent action already taken"
  }
}
```
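Keeping thresholds in data means a tuning change is a version bump, not a code change. A sketch of reading the file and applying its fit floor and account cool-down as a pre-check; the config is inlined as a string so the example runs standalone, and `precheck` is a hypothetical helper name.

```python
import json
from datetime import datetime, timedelta
from typing import Optional

CONFIG = json.loads("""{
  "play_name": "Hot Visitor → Fast Lane v1.2",
  "thresholds": {"icp_fit_min": 70, "intent_min": 65,
                 "pageviews_window_min": 2, "cooldown_hours_account": 24},
  "allow_pages": ["pricing", "product", "case-study"],
  "deny_reasons": {"low_fit": "ICP fit below threshold",
                   "cooldown": "Recent action already taken"}
}""")


def precheck(icp_fit: float, last_action_at: Optional[datetime], now: datetime,
             cfg: dict = CONFIG) -> Optional[str]:
    """Return a configured deny reason, or None if the play may proceed."""
    t = cfg["thresholds"]
    if icp_fit < t["icp_fit_min"]:
        return cfg["deny_reasons"]["low_fit"]
    cooldown = timedelta(hours=t["cooldown_hours_account"])
    if last_action_at is not None and now - last_action_at < cooldown:
        return cfg["deny_reasons"]["cooldown"]
    return None


now = datetime(2024, 5, 2, 9, 0)
print(precheck(82, datetime(2024, 5, 1, 17, 0), now))  # Recent action already taken
print(precheck(82, None, now))                         # None
```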

3) Run-log schema (minimum viable)

```sql
CREATE TABLE run_log (
  run_id            uuid PRIMARY KEY,
  agent_name        text,
  signal_ts         timestamptz,
  decision          text,        -- execute | needs_human | deny
  action_ts         timestamptz, -- NULL when no action was taken
  outcome           text,        -- sent | failed | approved | canceled
  success           boolean,
  duration_ms       int,
  account_id        text,
  person_id         text,
  reason            text,
  cost_estimate_usd numeric
);
```

4) KPI SQL (Postgres-ish)

Time-to-action

```sql
SELECT date_trunc('day', signal_ts) AS d,
       percentile_cont(0.5)
         WITHIN GROUP (ORDER BY EXTRACT(EPOCH FROM (action_ts - signal_ts)) / 60)
         AS median_minutes
FROM run_log
WHERE agent_name = 'Hot Visitor → Fast Lane'
  AND decision IN ('execute', 'needs_human')
  AND action_ts IS NOT NULL
GROUP BY 1
ORDER BY 1;
```

Action success rate

```sql
SELECT date_trunc('week', signal_ts) AS wk,
       100.0 * SUM(CASE WHEN success THEN 1 ELSE 0 END) / COUNT(*) AS success_pct
FROM run_log
WHERE agent_name = 'Hot Visitor → Fast Lane'
  AND action_ts IS NOT NULL  -- score only runs that actually took an action
GROUP BY 1
ORDER BY 1;
```
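To try the log and the success-rate query without a Postgres server, here is a sketch using Python's built-in `sqlite3`. The type mapping is an assumption (SQLite has no `uuid`, `timestamptz` or `date_trunc`, so timestamps are ISO-8601 text and weeks come from `strftime`). The query counts only runs with an `action_ts`, so denied runs aren't scored as failed actions. `log_run` is a hypothetical helper.

```python
import sqlite3
import uuid

conn = sqlite3.connect(":memory:")
# SQLite stand-ins: uuid/timestamptz -> TEXT (ISO-8601), boolean -> INTEGER 0/1
conn.execute("""
CREATE TABLE run_log (
  run_id TEXT PRIMARY KEY, agent_name TEXT, signal_ts TEXT, decision TEXT,
  action_ts TEXT, outcome TEXT, success INTEGER, duration_ms INTEGER,
  account_id TEXT, person_id TEXT, reason TEXT, cost_estimate_usd REAL)""")


def log_run(**fields) -> None:
    """Insert one run-log row; columns not given stay NULL."""
    fields.setdefault("run_id", str(uuid.uuid4()))
    cols = ", ".join(fields)
    marks = ", ".join("?" for _ in fields)
    conn.execute(f"INSERT INTO run_log ({cols}) VALUES ({marks})", tuple(fields.values()))


agent = "Hot Visitor → Fast Lane"
log_run(agent_name=agent, signal_ts="2024-05-01T09:00:00", decision="execute",
        action_ts="2024-05-01T09:04:00", outcome="sent", success=1)
log_run(agent_name=agent, signal_ts="2024-05-01T10:00:00", decision="execute",
        action_ts="2024-05-01T10:12:00", outcome="failed", success=0)
log_run(agent_name=agent, signal_ts="2024-05-01T11:00:00", decision="deny", reason="low_fit")

rows = conn.execute("""
SELECT strftime('%Y-%W', signal_ts) AS wk,
       100.0 * SUM(CASE WHEN success THEN 1 ELSE 0 END) / COUNT(*) AS success_pct
FROM run_log
WHERE agent_name = ? AND action_ts IS NOT NULL
GROUP BY 1 ORDER BY 1""", (agent,)).fetchall()
print(rows)
```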

5) Rollout checklist

One signal, one action, one success metric

Shadow mode ≥ 20 runs, review false positives

Safe mode with human approve, log approval latency

Rate limits + cooldowns + rollback switch

Weekly review; version bump on threshold/prompt changes

Two more agents worth cloning (brief)

Champions Tracking

Observe: email reply, doc view, or webinar question from a known champion

Decide: champion + opp stage + inactivity > 7 days

Act: task with suggested next step + Slack ping

Learn: did stage advance in 10 days?
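The Decide and Learn steps of Champions Tracking reduce to two small predicates. A sketch only: the open-stage names and the stage ordering are hypothetical placeholders for your CRM's pipeline stages.

```python
from datetime import date

# Hypothetical stage names; swap in your CRM's pipeline.
OPEN_STAGES = {"discovery", "evaluation", "proposal"}


def champion_nudge(is_champion: bool, opp_stage: str, last_touch: date, today: date) -> bool:
    """Decide: known champion + open opp + inactivity longer than 7 days."""
    return is_champion and opp_stage in OPEN_STAGES and (today - last_touch).days > 7


def stage_advanced(stage_at_nudge: str, stage_after_10_days: str, order: list[str]) -> bool:
    """Learn: did the opp move forward within the 10-day window?"""
    return order.index(stage_after_10_days) > order.index(stage_at_nudge)


order = ["discovery", "evaluation", "proposal", "closed-won"]
print(champion_nudge(True, "evaluation", date(2024, 5, 1), date(2024, 5, 10)))  # True
print(stage_advanced("evaluation", "proposal", order))                          # True
```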

Dead Leads → Precise Retargeting

Observe: closed-lost or no response > 45 days

Decide: ICP fit + topic interest from history

Act: sync to paid with topic-matched creative; pause on re-engage

Learn: lift vs. BAU on click-through and form-completion rates
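Likewise, the Observe/Decide/Act steps for dead-lead retargeting fit in one function. This is a sketch under assumptions: the fit floor of 70 is borrowed from the Fast Lane play, `topic` stands for whatever interest you infer from history, and the `sync:<topic>` return value is a hypothetical audience key.

```python
from typing import Optional


def retarget_action(closed_lost: bool, days_since_response: int, icp_fit: float,
                    topic: Optional[str], re_engaged: bool, min_fit: float = 70) -> str:
    """Return 'pause', 'sync:<topic>' or 'skip' for one lead (sketch)."""
    if re_engaged:
        return "pause"  # stop paid as soon as the lead re-engages
    dormant = closed_lost or days_since_response > 45
    if dormant and icp_fit >= min_fit and topic:
        return f"sync:{topic}"  # audience keyed by topic so creative can match
    return "skip"


print(retarget_action(False, 60, 80, "security", False))  # sync:security
```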

FAQ (the objections people actually have)

“Don’t we need perfect data first?”

No—start with conservative rules and abstain when uncertain. Improve from outcomes, not opinions.

“Will this spam people?”

Rate-limit, add cool-downs, and measure. Agents that annoy customers get killed by metrics.

“Legal will block this.”

Minimize PII in flight, encrypt at rest, log why an action was taken. Clarity ≠ risk; opacity is.

The big idea to leave with your team

Agentic marketing is not “let’s add more AI.” It’s choosing a few places where speed and precision matter, wiring signal → decision → action → learning, and publishing receipts that prove it moves the number you care about. Do that once, then again, and soon your “automation” budget becomes a pipeline machine.